Nutanix 5.0 Asterisk was released for the commercial edition about two weeks ago, and about a week ago it became available in the Community Edition (CE). I have already upgraded my home lab to CE 5.0 and wanted to take a few minutes to talk about some of the key updates in this release. There have been a LOT of changes, but I'm just going to focus on the ones I think are big news. Most of this applies to both the commercial and the CE release.

For those of you who are wondering, I have this development environment installed nested under ESXi. The new release works great in that configuration and deploys quickly and easily.

- Self Service Portal. This provides multi-tenancy-style functionality through a nice web GUI. You link it to a directory service such as AD, then create users, roles, and projects. You assign rights to the users and roles, and assign resources to the projects. Users can then log into the portal and manage their own resources. Cool! Be aware that this feature is apparently a bit buggy in the current CE release.

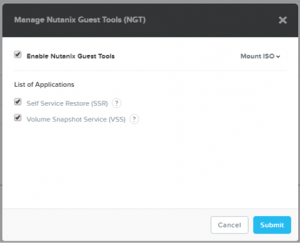

- Self Service Restore. If you install the Nutanix Guest Tools in your guests (and you should, just as you would VMware Tools), you can now do file-level restores from inside your guests. This works with the Self Service Portal so your users can restore their own files. Neato!

- REST 2.0. This is big for me, because it will let me add a lot more to the Nutanix Android app I've released. Look for updates on that front soon!

- Prism Central. CE now has access to essentially "Prism Pro" level features. Cool! This includes Prism Central, a separate install that provides single-pane-of-glass management of multiple clusters, along with features such as capacity forecasting and customizable dashboards.

- AFS 2.0. This update to the built-in scale-out file server adds quotas, async replication, and a rebalancing feature.

- NVMe support. This was already available in the commercial version and is now in CE as well. As a bonus, if the host supports VT-d, the NVMe device is presented as a pass-through device, which is better for performance.

- Affinity Policies. This applies to CE, or to anyone running Acropolis in production. You can now create affinity and anti-affinity policies; think VMware DRS rules. Right now, the GUI only lets you configure VM-to-host affinity policies. This release also supports VM-to-VM anti-affinity, but you can only configure that via the CLI.

- ABS updates. ABS is Acropolis Block Services, which basically uses the cluster as an iSCSI SAN. I was skeptical at first, but I've since encountered a couple of situations in production datacenters where ABS solved problems for customers. New features here include CHAP authentication, session load balancing, and growing block volumes online.

- Cluster Latency Visualization. This provides some nice eye candy, but also a lot of useful information about your storage utilization, including IO latency over time, read and write sizes, and which resources (RAM, SSD, HDD) are serving the IO requests.
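Because VM-to-VM anti-affinity is CLI-only in this release, here is a minimal Python sketch that assembles the acli commands for it. The function name, group name, and VM names are hypothetical, and the `vm_group.*` command syntax is my assumption based on the acli `vm_group` namespace; check it against the acli reference for your AOS version before running anything. The script only prints the commands (a dry run), so you can review them and then paste them into a CVM session.

```python
"""Hypothetical helper that builds acli commands for a VM-to-VM anti-affinity group.

Assumed acli syntax (verify against the acli reference for your AOS version):
  acli vm_group.create <group>
  acli vm_group.add_vms <group> vm_list=<vm1>,<vm2>
  acli vm_group.antiaffinity_set <group>
"""
import shlex
from typing import List


def anti_affinity_commands(group: str, vms: List[str]) -> List[str]:
    """Return acli command strings intended to keep `vms` on separate hosts."""
    if len(vms) < 2:
        # Anti-affinity only makes sense between two or more VMs.
        raise ValueError("anti-affinity needs at least two VMs")
    # Quote values so names containing spaces survive the shell intact.
    name = shlex.quote(group)
    vm_list = shlex.quote(",".join(vms))
    return [
        f"acli vm_group.create {name}",
        f"acli vm_group.add_vms {name} vm_list={vm_list}",
        f"acli vm_group.antiaffinity_set {name}",
    ]


if __name__ == "__main__":
    # Dry run: print the commands instead of executing them.
    for cmd in anti_affinity_commands("sql-nodes", ["sql01", "sql02"]):
        print(cmd)
```

Printing rather than executing keeps the sketch safe to try anywhere; if the syntax checks out on your cluster, you could feed the same strings to a CVM shell.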

One thing in the commercial version that is NOT in CE is single-node RF2. The commercial version can now be deployed on a single node for use as a backup target. It's essentially a single-node cluster that does local data protection. Its main purpose is to get your data safely off your production cluster, so it isn't designed to run VMs directly on the single-node box. Today, this feature doesn't work in CE. I'd love to see it make its way to CE, though, because it would give some drive redundancy to a single-node CE cluster like the one I use for development.

There are plenty of other updates in 5.0, but these are the ones I'm excited about right now. As I play with it more and deploy it at more customer sites, I'm sure more will pop up.

Categories: Datacenter Homelab Hyperconverged Storage Virtualization
