“If it ain’t broke, don’t fix it.”

– IT Manager, Fortune 500 manufacturing firm

“Once servers are deployed in our environment, we never touch the firmware again.”

– IT Director, Private Equity Firm

“Upgrading code is too risky. We strive to minimize risk in our datacenter.”

– IT Director, Global Software Company

Those of us who have been working with technology long enough have run into all of these personalities. These are the leaders in environments where an end user can really back themselves into a corner. Typically, these opinions take hold after a code upgrade goes wrong and causes some impact. I get it, that happens. But there is more than just uptime at stake when you fail to maintain a reasonable level of currency in your datacenter.

Let’s take a look at a few real-life situations that could have been avoided by staying up to date. All names, places, dates, and some details have been changed to protect the embarrassed.

Interoperability.

I had a customer this year who was running their storage array on ancient code. The firmware on the Fibre Channel switches was equally outdated. This was an older VMware environment. Sure, the gear had been running great for a couple of years. At some point, the admins heard enough about all the new features added to VMware since their last update. “Wouldn’t these features be nice to have?” So they set about upgrading their VMware compute cluster to 6.5.

I have seen it happen over and over again. We make sure our hypervisors and firmware are all at supported levels when we first deploy a solution, but that compatibility is forgotten as time goes on. Pretty soon, some end users forget entirely that these products do indeed talk to each other, and need to keep speaking the same language. VMware and storage arrays rely on a number of APIs and protocols for this communication, such as VAAI, VASA, and SCSI, and both sides have to keep up with them.

In this example, they upgraded only VMware, never considering that such a leap on the VMware side, made without regard for the rest of the environment, might result in compatibility issues. The end result was downtime, and it came from a conscious effort to “reduce risk” by touching as few components as possible. A better strategy would have been to spend some time up front validating that the end state following the upgrade would be a supported configuration.
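To make that kind of validation concrete, here is a minimal Python sketch of a pre-upgrade check. The matrix contents, component names, and version numbers are all made up for illustration; in practice you would fill them in from your vendors' published interoperability guides.

```python
from typing import Dict, List, Tuple

Version = Tuple[int, ...]

# Hypothetical support matrix: for each target hypervisor release, the minimum
# firmware level each attached component must run. Every value is invented.
SUPPORT_MATRIX: Dict[str, Dict[str, Version]] = {
    "6.0": {"storage_array": (5, 2), "fc_switch": (7, 4)},
    "6.5": {"storage_array": (6, 1), "fc_switch": (8, 0)},
}


def parse_version(text: str) -> Version:
    """Turn a dotted version string such as '6.1' into a comparable tuple."""
    return tuple(int(part) for part in text.split("."))


def unsupported_components(target: str, installed: Dict[str, str]) -> List[str]:
    """Return the components that would be below the supported minimum
    if the hypervisor were upgraded to `target`."""
    problems = []
    for component, minimum in SUPPORT_MATRIX[target].items():
        current = installed.get(component)
        # A component missing from the inventory is treated as unsupported.
        if current is None or parse_version(current) < minimum:
            problems.append(component)
    return sorted(problems)


# Example inventory (also invented).
installed = {"storage_array": "5.3", "fc_switch": "8.1"}
print(unsupported_components("6.5", installed))
```

With this made-up inventory, a move to 6.5 flags the storage array as the component that needs attention before the hypervisor upgrade goes ahead.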

You want to be conservative with the code on your storage arrays? Probably a good idea, but you need to consider your environment as a whole when planning upgrades.

Security.

I’m going to use an older example here, because I think it’s funny. I don’t have any proof of this, though I’d love to hear from you if you saw this happen firsthand.

If you have been working with storage for a while, you probably had yourself an EMC Clariion or derivative at some point. Even admins who had worked with Clariions for a long time sometimes didn’t realize that the arrays ran on Windows. The story I’ve heard is that the arrays were themselves vulnerable to some of those early viruses targeting Windows. I don’t recall if it was Code Red, Blaster, Sasser, or something else. There was no way to put antivirus on the array, and the vulnerability was promptly fixed.

We all know that security drives a lot of the updates for the technology we use every day. Even if your gear is in a well-secured environment, you can never be sure what the next big threat may be. Just ask all the large enterprises that had major data breaches this year, such as Marriott, Facebook, Macy’s, Adidas, Sears, or any of the others listed here.

Stay Out of the Corner.

I saw this one a lot in 2018. A customer deferred maintenance on their environment despite warnings about upcoming “end of support” and “end of sale” dates. If you have a chunk of your datacenter that is not completely homogeneous, you can find yourself in a situation where only part of your environment can move on with you into the future.

In one example, a customer had a storage array with about 250 drives in it that was approaching the end of its support life. It was old enough that the next generation product was already approaching end of sale. Some of the disk drives attached to this old storage array could have been moved forward to the next generation product, because a single generation of backwards compatibility was built in and many of the drives had been added over time. The customer deferred maintenance a few months too long, and by then the next generation product was no longer available for sale. That left them having to move forward two generations, which meant they could not connect their existing drives to the new controllers. They had to throw out perfectly good drives and enclosures because they didn’t plan ahead well enough, and missed the cutoff by just a few months.

There is something to be said for getting the most you can out of an existing investment, but taken to the extreme, running outdated equipment can end up costing you more money when it forces you to make purchases you hadn’t planned for. Speaking of how staying up to date can save you money…

Saving Money.

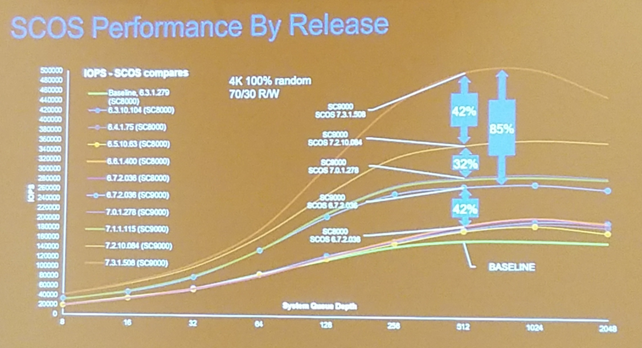

What? Keeping my datacenter relatively current can save me money? Yup. Check out this grainy screenshot that I took at Dell EMC World 2018:

In this screenshot, we can see that given the SAME HARDWARE, there was an increase in IOPS capacity (or a reduction in latency, depending on how you want to think about it) of about 85% between version 6.7 and 7.3. So, simply by applying an updated version of the software, you could significantly improve the performance of your storage array. This means your batch jobs should run faster, or your over-taxed system can get some breathing room. By staying on a modern code level, you can significantly defer purchases and get more out of the system you have. These sorts of performance improvements are not isolated to storage arrays. We see the same phenomenon from products like Nutanix and VMware.
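For a rough sense of what that kind of gain could mean for purchase timing, here is a back-of-the-envelope sketch in Python. The 85% figure comes from the screenshot; the baseline capacity, current demand, and 20% yearly growth are assumptions picked purely for illustration, and treating the gain as a straight capacity multiplier is a simplification.

```python
import math


def years_of_headroom(capacity_iops: float, demand_iops: float,
                      annual_growth: float) -> float:
    """Years until demand, compounding at annual_growth, reaches capacity."""
    if annual_growth <= 0:
        raise ValueError("annual_growth must be positive")
    if demand_iops >= capacity_iops:
        return 0.0  # already at or over capacity
    return math.log(capacity_iops / demand_iops) / math.log(1 + annual_growth)


# Assumed numbers, not measurements from any real array.
BASELINE_IOPS = 100_000  # what the array sustains on the old code
DEMAND_IOPS = 90_000     # what the workload asks for today
GROWTH = 0.20            # assumed 20% demand growth per year
UPLIFT = 1.85            # the roughly 85% gain from the screenshot

before = years_of_headroom(BASELINE_IOPS, DEMAND_IOPS, GROWTH)
after = years_of_headroom(BASELINE_IOPS * UPLIFT, DEMAND_IOPS, GROWTH)
print(f"Headroom on old code: {before:.1f} years")
print(f"Headroom on new code: {after:.1f} years")
```

Under these assumed numbers, the software update alone pushes the next performance-driven purchase out by a little over three years. Your own growth rate will move that figure a lot, so plug in your own values.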

There are plenty of more obvious ways that updates can save you money as well. How about by adding features that improve your efficiency? Let’s take the above storage array example again. In version 6.7, there was no dedupe capability. Fast forward to 7.3, and there are significant data reduction improvements. By staying on a modern code level, you will have earned the ability to store more data on the hardware you already have. This allows you to defer capacity purchases, which is valuable because the cost per GB of media is always dropping. Again, we saw this same thing happen across other products, including Nutanix and VMware.

These are just a few examples of how keeping abreast of the way your platform’s capabilities evolve over time, and judiciously applying those updates, can save you money.

Enough For Now.

I’m not saying you need to be running at the bleeding edge of everything in your datacenter. I think you want to be deliberate and thoughtful about how you stay up to date. I also know that sometimes there are constraints outside of your control, such as budget. For every shop held back by constraints like these, however, there are IT admins and leaders who choose to fall behind, often at their own peril.

Which camp do you fall into? Let me know in the comments below.
